The OL Hub is built to solve many existing legacy integration problems with an eye to the future (Part 2 of 4)

The first blog in this series focused on why we decided to create a SaaS platform. This one shows the benefits of that platform, which is called the OL Hub.

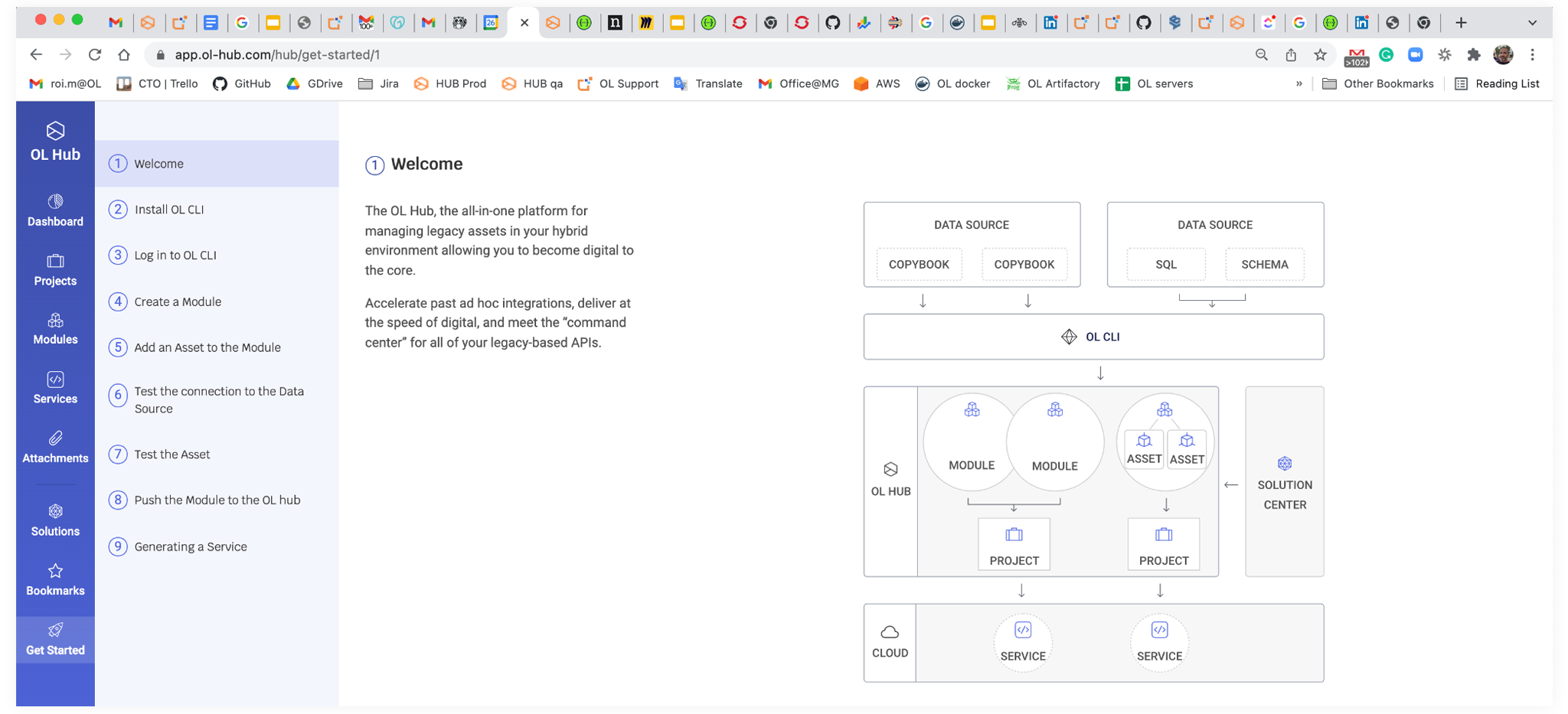

OL Hub is a multi-tenant SaaS repository of backend artifacts and their related APIs. It supports designing, browsing, tagging, documenting, and generating services, and it enables users to move toward a design-time/DevOps model.

In the last blog, I discussed a problem: OL Hub needs access to backend artifacts, but those sit inside the company’s network, so reaching them requires security access and adds complexity. We worked around this by building a CLI that is installed inside the network. We chose a CLI because it is easy to install anywhere that has a basic command line. The CLI parses and tests backend artifacts.

The CLI then creates basic JSON representations of the interfaces to the legacy system and pushes those JSON artifacts into the OL Hub. The CLI can also do a number of things that can be done inside the Hub, such as generating APIs and even supporting deployment. Given its powerful functionality, the CLI enables DevOps automation.

The one disadvantage of a CLI is that it is really a developer power feature. To simplify usage, we plan to add a Web UI and APIs to support backend operations.

The terminology we chose was:

- Module (for backend app representation)

- Asset (for backend operation)

- Project (for representing a microservice or function)

- Contract (for the API interface)

- Method (representing an endpoint)

A method can contain a flow engine (developed inside the Hub).
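To make the terminology concrete, here is a minimal sketch of how these five concepts could be modeled as plain data. The class and field names are illustrative assumptions, not the Hub's actual schema.

```python
from dataclasses import dataclass, field, asdict
from typing import List, Optional
import json


@dataclass
class Asset:
    """A single backend operation (field names here are hypothetical)."""
    name: str
    input_fields: List[str]
    output_fields: List[str]


@dataclass
class Module:
    """Represents one backend application and the operations it exposes."""
    name: str
    assets: List[Asset] = field(default_factory=list)


@dataclass
class Method:
    """One endpoint of a contract; it may carry a flow definition."""
    name: str
    flow: Optional[dict] = None


@dataclass
class Contract:
    """The API interface: a named set of methods."""
    name: str
    methods: List[Method] = field(default_factory=list)


@dataclass
class Project:
    """A deployable unit such as a microservice or function."""
    name: str
    contracts: List[Contract] = field(default_factory=list)


module = Module("core-banking", [Asset("get-balance", ["accountId"], ["balance"])])
project = Project("accounts-service", [Contract("accounts-api", [Method("getBalance")])])

# Nothing in this metadata names a target technology, so the same
# description could be handed to any generator or deployer.
project_json = json.dumps(asdict(project), indent=2)
```

Because the model is plain data, serializing it to JSON is a single call, which fits the idea of keeping the design separate from the deployment technology.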

This terminology decouples the integration process from the actual technology it is deployed to (e.g., Java/C#/Node/REST/Kafka/Serverless). This is one of the most powerful capabilities of OL Hub, and a big differentiator from other iPaaS offerings or traditional middleware.

We created a new design-time engine. Our old engine parses backend artifacts and generates Java code, with very little separation between the platform and connectors, which isn’t practical for a SaaS platform.

We develop in Kotlin (a cross-platform programming language designed to interoperate fully with Java). We also use Vert.x (a polyglot, event-driven application framework that runs on the JVM). R&D embraced these technologies mainly for their powerful async features, simplicity, and growing popularity.

Next, we developed our flow engine, based on JSON metadata. In the IDE, mapping is done through the UI and annotation generation, but orchestration logic is accomplished by Java code written by our users. So we needed a lightweight way to build orchestration.

A flow is a set of steps with arbitrary input/output structures. Each step performs light logic in JavaScript, a backend operation, or a mapping from the flow input/output and/or other steps. Everything persists in JSON.
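As a rough illustration of a JSON-defined flow, the sketch below interprets a three-step flow. The JSON layout, the step type names, and the `stepId.field` reference syntax are assumptions made for this example, not the Hub's real format. Named Python functions stand in for the JavaScript logic snippets, and a stub stands in for the backend call.

```python
import json

# Hypothetical flow definition; the real Hub schema may look different.
FLOW_JSON = """
{
  "name": "getAccountSummary",
  "steps": [
    {"id": "fetch", "type": "backend", "operation": "get-balance",
     "input": {"accountId": "input.accountId"}},
    {"id": "flag", "type": "logic", "function": "is_overdrawn",
     "input": {"balance": "fetch.balance"}},
    {"id": "result", "type": "mapping",
     "input": {"account": "input.accountId", "balance": "fetch.balance",
               "overdrawn": "flag.value"}}
  ]
}
"""

# Stub standing in for a real backend connector call.
BACKEND_OPERATIONS = {
    "get-balance": lambda args: {"balance": -25.0 if args["accountId"] == "A2" else 100.0},
}

# Named functions standing in for the light JavaScript logic.
LOGIC_FUNCTIONS = {
    "is_overdrawn": lambda args: {"value": args["balance"] < 0},
}


def resolve(path, context):
    """Look up a 'stepId.field' reference in the outputs collected so far."""
    step_id, field_name = path.split(".", 1)
    return context[step_id][field_name]


def run_flow(flow, flow_input):
    """Run each step in order and return the output of the last step."""
    context = {"input": flow_input}
    output = {}
    for step in flow["steps"]:
        args = {key: resolve(path, context) for key, path in step["input"].items()}
        if step["type"] == "backend":
            output = BACKEND_OPERATIONS[step["operation"]](args)
        elif step["type"] == "logic":
            output = LOGIC_FUNCTIONS[step["function"]](args)
        elif step["type"] == "mapping":
            output = args  # a mapping step only reshapes existing values
        else:
            raise ValueError(f"unknown step type: {step['type']}")
        context[step["id"]] = output
    return output


flow = json.loads(FLOW_JSON)
summary = run_flow(flow, {"accountId": "A2"})
```

Since the whole flow is data, it can be stored, versioned, and tested without generating any code first.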

A flow “language” can’t provide full flexibility in orchestrations, so we created code “entry points” so that the user can add native language logic between/before/after flow steps.
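One way to picture these entry points is as hooks registered before or after a named step. The sketch below is an assumption about the general shape of such a mechanism; the `EntryPoints` class and `run_step` helper are hypothetical names, not Hub APIs.

```python
from collections import defaultdict


class EntryPoints:
    """Registry of user-written hooks keyed by (when, step id)."""

    def __init__(self):
        self._hooks = defaultdict(list)

    def register(self, when, step_id, hook):
        self._hooks[(when, step_id)].append(hook)

    def apply(self, when, step_id, data):
        # Run every hook registered for this point, in registration order.
        for hook in self._hooks[(when, step_id)]:
            data = hook(data)
        return data


def run_step(step_id, step_fn, data, entry_points):
    """Wrap a flow step with its 'before' and 'after' entry points."""
    data = entry_points.apply("before", step_id, data)
    data = step_fn(data)
    return entry_points.apply("after", step_id, data)


def fetch(data):
    # Stub flow step standing in for a backend operation.
    return {"accountId": data["accountId"], "balance": 100.0}


entry_points = EntryPoints()
# Native-language logic the flow "language" itself does not need to express.
entry_points.register(
    "before", "fetch", lambda d: {**d, "accountId": d["accountId"].strip().upper()}
)
entry_points.register("after", "fetch", lambda d: {**d, "currency": "USD"})

result = run_step("fetch", fetch, {"accountId": " a1 "}, entry_points)
```

The flow definition stays declarative, while anything it cannot express lives in ordinary code attached at well-defined points.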

Then we created plugins for generators and deployers that can be developed without any dependency on the platform.
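A plugin model like this usually comes down to a small interface plus a registry. The following is a minimal sketch under that assumption; `GeneratorPlugin`, `register`, and the project dictionary layout are hypothetical and only illustrate how a generator could depend on metadata alone.

```python
from abc import ABC, abstractmethod


class GeneratorPlugin(ABC):
    """The only thing a plugin needs to know about: metadata in, files out."""

    name = ""

    @abstractmethod
    def generate(self, project):
        """Return a mapping of file name to file content."""


PLUGINS = {}


def register(plugin_cls):
    """Class decorator that makes a plugin discoverable by name."""
    PLUGINS[plugin_cls.name] = plugin_cls()
    return plugin_cls


@register
class RouteListGenerator(GeneratorPlugin):
    """Toy generator that emits one route per contract method."""

    name = "route-list"

    def generate(self, project):
        lines = [f"POST /{project['name']}/{method}" for method in project["methods"]]
        return {"routes.txt": "\n".join(lines)}


project = {"name": "accounts", "methods": ["getBalance", "listTransactions"]}
files = PLUGINS["route-list"].generate(project)
```

A deployer plugin could follow the same pattern, taking generated files as input instead of project metadata.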

With testing, we found another big gap: How to test invocation of a backend program, without generating code? We wanted to be language agnostic even in our testing process. So we refactored our runtime.

Our runtime used to work with POJOs (Plain Old Java Objects), but now we needed to run our runtime connectors with JSON. So we did a major refactoring, and now the whole runtime works with JSON (and still with POJOs), since the runtime is shared with our IDE full-code solutions.
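The idea of one runtime serving both typed objects and JSON can be sketched by normalizing every request to a JSON-like dictionary at the boundary. In this illustration a Python dataclass plays the role of a POJO; `BalanceRequest`, `to_payload`, and `invoke` are hypothetical names.

```python
from dataclasses import dataclass, asdict, is_dataclass


@dataclass
class BalanceRequest:
    """Typed request object, playing the role of a generated POJO."""
    account_id: str


def to_payload(request):
    """Normalize a typed object or a JSON-like dict to a plain dict."""
    if is_dataclass(request) and not isinstance(request, type):
        return asdict(request)
    if isinstance(request, dict):
        return request
    raise TypeError(f"unsupported request type: {type(request).__name__}")


def invoke(request):
    """Stub connector: the core logic only ever sees the dict form."""
    payload = to_payload(request)
    return {"account_id": payload["account_id"], "balance": 100.0}


from_object = invoke(BalanceRequest(account_id="A1"))
from_json = invoke({"account_id": "A1"})
```

With this shape, a backend program can be invoked for testing from JSON alone, without generating typed classes first.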

This was an opportunity to better design our RPC (remote procedure call) runtime infrastructure, which is used to call backend system operations. We needed to support JSON, asynchronous calls, and multiple responses, and to make the infrastructure easier for users. We kept the existing RPC SDK compatible with the IDE, as we decided to use the same runtime for the IDE-generated artifacts.
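The combination of JSON payloads, asynchronous calls, and multiple responses maps naturally onto an asynchronous stream. The sketch below uses Python's `asyncio` to show the pattern; `call_operation` and its payload are hypothetical and do not reflect the actual RPC SDK.

```python
import asyncio


async def call_operation(operation, payload):
    """Hypothetical async RPC call that can yield more than one response."""
    for page in range(1, payload["pages"] + 1):
        await asyncio.sleep(0)  # stand-in for waiting on the backend
        yield {"operation": operation, "page": page}


async def collect(operation, payload):
    """Consume responses as they arrive instead of blocking for all of them."""
    return [response async for response in call_operation(operation, payload)]


responses = asyncio.run(collect("list-transactions", {"pages": 3}))
```

A caller that only needs the first response can stop iterating early, which a single blocking request/response call would not allow.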

The UX/UI team was working on creating an overall Hub UI and orchestration canvas to design flows, but we wanted something valuable quickly. So we added support for auto-generating contracts. Every asset from a module becomes a method in a contract, with the asset's input/output mapped 1-to-1 to the method's input/output. This is an option for creating contracts today.

This provides real value immediately: at this first stage, the Hub UI is needed only to view APIs, not to build them.
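The 1-to-1 generation described above can be sketched in a few lines. The dictionary layout and the `generate_contract` function are assumptions for illustration only.

```python
def generate_contract(module):
    """Create one contract method per asset, copying input/output 1-to-1."""
    methods = []
    for asset in module["assets"]:
        methods.append({
            "name": asset["name"],
            "input": dict(asset["input"]),    # same fields as the asset input
            "output": dict(asset["output"]),  # same fields as the asset output
            "asset": asset["name"],           # which backend operation it calls
        })
    return {"name": f"{module['name']}-contract", "methods": methods}


module = {
    "name": "core-banking",
    "assets": [
        {"name": "get-balance",
         "input": {"accountId": "string"},
         "output": {"balance": "number"}},
        {"name": "list-transactions",
         "input": {"accountId": "string"},
         "output": {"transactions": "array"}},
    ],
}

contract = generate_contract(module)
```

The generated contract is a starting point: methods can later be renamed, reshaped, or backed by a flow instead of a single asset.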

The IDE code generator generates full code, but it is “one way” and introduces a problem of “one source of truth”. Nevertheless, it is still a valuable generator for backward compatibility with the IDE.

To support development speed and DevOps automation, we introduced the OPZ (OpenLegacy Project Zip) export and added support for generating it from the command line. This way, developers can export a project at the end of their design work without loading the Hub at all. The exported OPZ isn’t connected directly to OL Hub.

The exported OPZ also simplified the work when designing inside the Hub, but we asked ourselves: why should we need to export every time we change anything, just to support the API testing process? So when using the Hub, we changed the system to load the metadata during project startup, and the testing problem was solved!

Another initiative R&D started was the OL cloud runtime, which enables any current and future OL client (including existing IDE clients) to host their runtime microservices. We built it first as an offering for our less-regulated clients, although any client can use it. It is a dynamic cluster in the cloud, providing build, deploy, and hosting services. It is also a great way to demo the product with a single-click “deploy”.

We then ramped up the migration of our existing IDE connectors in parallel to R&D work. OpenLegacy supports many different legacy platforms.

We created a demo for almost every supported backend legacy system and built banking and insurance backend apps for each, making it easier for prospects to test the platform before connecting it to their own backend. The bulk of our clients and prospects are in banking or insurance, so it made sense to create these apps, and doing so was a great feedback loop on the maturity of the SaaS platform. We provide ready-made scripts that use the OL CLI to parse those apps and run them as APIs in a fully automated way.

We also built sandboxes and scripting for common deployment environments: AWS, OpenShift, Azure, and GCP.

The next blog in the series shows why the OL Hub is built to solve many existing legacy integration problems
